Unlocking Insights from BUSLIB-L: Using AI to Deep Dive into a Popular Business Librarian Forum

Introduction: A Journey into AI Research Using Librarian Discourse

Like many working in the data space, I’ve been closely watching the rise of artificial intelligence with both enthusiasm and curiosity about how it might redefine the way I approach my role as a founder. While I’m not a data scientist or researcher, I’ve increasingly felt the pull to get hands-on with AI tools to see how drastically they might reshape our day-to-day workflows.

At Dewey Data, we partner closely with academic libraries, but as someone who isn't a librarian myself, I’m always looking for ways to better understand the challenges they face—especially around data access, research support, and instruction. This personal curiosity collided with professional relevance and sparked an idea: could AI help me surface meaningful insights from a real-world dataset created by academic librarians themselves?

So, I set out to create and analyze a unique, real-world dataset. BUSLIB-L - a long-running ListServ I’ve followed for several years - is a treasure trove of domain-specific discourse, rich with the kinds of questions, concerns, and collaboration that reflect the realities of library work. My goal in analyzing this archive with AI was first and foremost to test the practical capabilities of these tools, and in the process, learn more about the nuanced needs of one of our most important audiences.

Methodology and Scope

This blog post presents an exploratory analysis of BUSLIB-L messages collected from an XML archive containing over 10,000 entries. There are many more meaningful insights to be extracted, but I wanted to focus on sharing those that may be relevant or insightful for the BUSLIB-L members who make this forum valuable.

Using a combination of Python scripting and AI-assisted natural language processing tools from ChatGPT, I:

- Parsed and structured the message data

- Removed automated digests, signatures, and unsubscribes

- Isolated original posts (as opposed to replies)

- Applied clustering algorithms and rule-based keyword classification to identify recurring themes

A word of warning: This analysis is not peer-reviewed research. It was conducted as a self-guided learning project aimed at exploring the practical applications of AI for domain-specific content. Rather than analyzing the entire historical archive, I focused on the past nine years. Still, the patterns uncovered were interesting, and the project gave me a newfound appreciation for the power of AI tools.

Posting Volume and Engagement

Analyzing over 4,500 original messages revealed the high level of activity and collegiality within the BUSLIB-L community. Original posts are consistently followed by replies (often in a 2:1 ratio), suggesting a high degree of responsiveness and peer support.

Peak posting periods align with the academic calendar—especially early semester months and summer planning windows. And the most active contributors tend to be from R1 institutions.

I was also impressed to see the consistency of engagement over time. I would have assumed that a ListServ would lose participation over time, as newer, modern communication options emerge. However, there is likely a high “switching cost” associated with leaving such a valuable corpus of data behind. Email doesn’t seem to be going anywhere.

For a vendor like us, this analysis underscored just how much librarians rely on one another for technical problem-solving, instructional advice, and platform navigation.

Thematic Structure of BUSLIB-L

Using keyword clustering and text pattern recognition, I began to tag each original post by theme. After refining the categories through manual review, six themes emerged:

For us at Dewey, this classification was more than academic: it offered a framework for understanding what support librarians need most from their data partners. It was also helpful confirmation that data services challenges are on the rise in academic libraries. This conclusion is similar to that of John Bushman, Lisa DeLuca, Michael Murphy, and David Frank, who found the same result in their research on academic data services.

Data Challenges in Focus

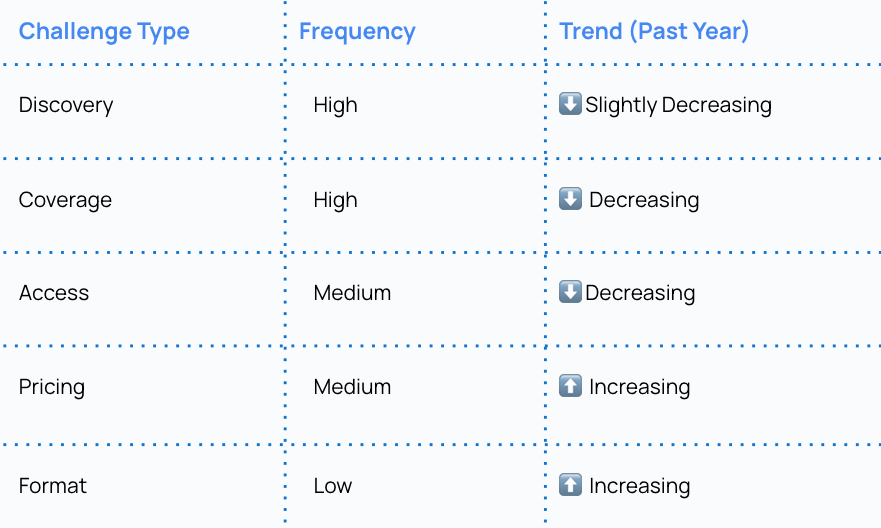

Because over one-third of all posts involved data issues, and this is where Dewey is building solutions, I dug deeper into this category. I tagged posts with challenge types such as discovery, access, pricing, coverage, and formatting.

Here is what emerged:

This breakdown echoed many conversations we've had with partners: locating relevant data remains difficult, but new pain points are emerging in pricing, especially amid federal budget cuts.

Key data-specific themes surfaced from posts include:

- Locating obscure financial datasets or ESG metrics

- Troubleshooting proxy access and login systems

- Understanding what "coverage" really means across tools like WRDS or Bloomberg

- Negotiating subscription pricing, especially under budget constraints

- Handling inconsistent or inaccessible export formats (e.g., CSV, Excel, API)

Vendor Mentions: Who Librarians Talk About

Another part of the analysis involved tracking vendor mentions. I wanted to know: which tools dominate the conversation?

The top 10 were:

- Mergent

- Bloomberg

- WRDS

- Statista

- S&P

- PrivCo

- Capital IQ

- PitchBook

- Orbis

- LexisNexis

These mentions weren’t just passing references. Often they were the source of long troubleshooting threads or teaching discussions. As a vendor, this was incredibly useful—it showed not only who is top of mind, but where expectations and frustrations are concentrated.

It’s a good reminder that clear terms, strong documentation, and a clean, modern user interface will go a long way in preventing common issues related to data access.

(Dewey was 30th on the list. I know it’s mostly because we’re a new vendor, but I like to think it’s because our product is so easy to use that no one needs to use a support forum 😉)

Wrapping Up

This project surfaced a range of ideas that could be explored more rigorously in future research or product work:

- How do topic themes shift over time and across institution types?

- What kinds of questions generate the most discussion and engagement?

- Can LLMs help classify sentiment or urgency in text-based threads?

For me, this exercise was more than a technical test. It was an invitation to listen more closely to the people we’re building for. I hope it sparks ideas—for librarians, vendors, and researchers alike, and I’d be happy to collaborate with anyone interested in diving in deeper.

If you’re interested in exploring this corpus of data, please reach out! I’d be happy to share.